What’s going on?

Since ChatGPT came onto the scene in late 2022, test after test has proven vulnerable to the wiles of generative AI. The initial GPT-3.5 model was impressive enough, and the more advanced GPT-4 has shown an even greater proficiency at test-taking. Name a large, well-known test, and ChatGPT has probably passed it. In addition to bar exams, SATs, and AP exams, ChatGPT has also passed 9 out of 12 AWS certification exams and Google’s L3 engineer coding interview.

At HackerRank, we’ve seen firsthand how AI can bypass MOSS code similarity, the industry standard for coding plagiarism detection.

All of these sudden vulnerabilities can seem scary for those administering tests. How can you trust the answers you’re getting? If your tests rely heavily on multiple-choice questions, which are especially vulnerable to large language models, how can you revise test content to be more AI-resistant?

These developments are worrying for test-takers, as well. If you’re taking a test in good faith, how can you be sure you’re getting a fair shake? Interviewing is stressful enough without having to wonder if other candidates are seeking an AI-powered advantage. Developers deserve the peace of mind that they’re getting a fair shot to showcase their skills.

What’s our stance?

At HackerRank, we’ve done extensive testing to understand how AI can disrupt assessments, and we’ve found that AI’s performance is intrinsically linked with question complexity. It handles simple questions easily and efficiently, finds questions of medium difficulty challenging, and struggles with complex problems. This pattern parallels most candidates’ performance.

However, creating increasingly intricate questions to outwit AI isn’t a sustainable solution. Sure, it’s appealing at first, but it’s counterproductive for a few reasons.

- First, more complex questions could compromise the core value of online assessments by weakening the quality of talent evaluation. Added complexity doesn’t automatically translate into better signals about a candidate’s skills. Complex questions take longer to answer, which means either longer assessments or fewer questions (and fewer signals to evaluate).

- Second, it would certainly degrade the candidate experience by focusing on frustrating AI rather than on giving developers a chance to showcase their skills. A worse experience could result in more candidates dropping out of the pipeline.

- Third, it would set up a game of perpetual leapfrog as more advanced AI models solve more complex problems, and even more complex problems are created to trip up more advanced AI.

Instead, our focus remains on upholding the integrity of the assessment process, and thereby ensuring that every candidate’s skills are evaluated fairly and reliably.

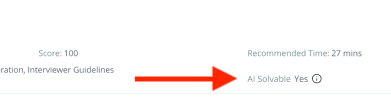

Introducing our new AI solvability indicator

Upholding integrity means being realistic and transparent. This means acknowledging that there are assessment questions that AI can solve. And it means alerting you when that is the case, so you can make informed decisions about the content of your assessments.

That is why we are introducing an AI solvability indicator.

This indicator operates on a combination of two criteria.

- Whether or not a question can be fully solved by AI.

- Whether or not that solution is picked up by our AI-powered plagiarism detection.

If a question is not solvable by AI, it does not get flagged. Likewise, if a question is solvable, but the answer triggers our plagiarism detection model, it does not get flagged. The question may be solvable, but plagiarism detection ensures that the integrity of the assessment is protected.

If a question is solvable by AI and the solution evades plagiarism detection, it will get flagged as AI Solvable: Yes. Generally, these questions are simple enough that the answers don’t generate enough signals for plagiarism detection to be fully effective.
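The two criteria combine into a simple rule, which can be sketched as follows. This is only an illustration of the logic described above, not HackerRank’s actual implementation; the names `QuestionCheck`, `solved_by_ai`, `caught_by_plagiarism_detection`, and `is_flagged_ai_solvable` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuestionCheck:
    """Hypothetical record of the two criteria for one question."""
    solved_by_ai: bool                    # could AI fully solve the question?
    caught_by_plagiarism_detection: bool  # was the AI solution detected?


def is_flagged_ai_solvable(check: QuestionCheck) -> bool:
    """Return True only when AI solves the question AND the solution
    evades plagiarism detection (assumed reading of the rule above)."""
    return check.solved_by_ai and not check.caught_by_plagiarism_detection


if __name__ == "__main__":
    # Walk through the three cases the text describes.
    cases = {
        "not solvable by AI": QuestionCheck(False, False),
        "solvable, but detected": QuestionCheck(True, True),
        "solvable and undetected": QuestionCheck(True, False),
    }
    for label, check in cases.items():
        flag = "Yes" if is_flagged_ai_solvable(check) else "No"
        print(f"{label}: AI Solvable: {flag}")
```

Only the third case, a question that is solvable and whose solution goes undetected, ends up flagged.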

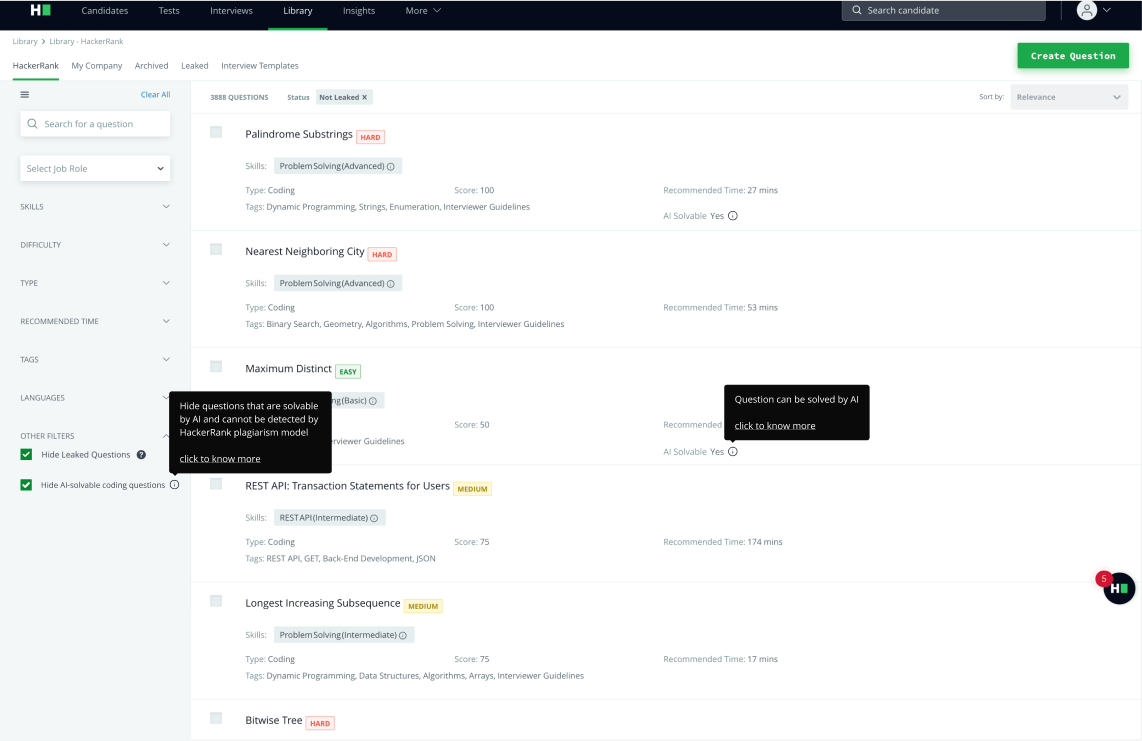

Questions flagged as AI solvable will be removed from certified assessments, but may still appear in custom assessments, particularly if those assessments have not been updated in some time.

If you’re browsing through questions, you can also choose to hide all AI-solvable questions, just as you can hide all leaked questions.

What else is HackerRank doing?

Beyond the transparency of the AI solvability indicator, we are building in measures to actively ensure assessment integrity. These include:

- AI-powered plagiarism detection. Our industry-first, state-of-the-art plagiarism detection system analyzes dozens of signals to detect certain out-of-bounds behavior. With an incredible 93% accuracy rate, our system repeatedly detects ChatGPT-generated solutions, even when they’re typed in by hand, and even when they easily bypass standard detection methods.

- Certified assessments. Let us handle assessment maintenance. Our certified assessments are out-of-the-box tests curated and maintained by HackerRank experts. We take on all the upkeep, including keeping content current and flagging and replacing any leaked or AI-solvable questions.

- Expanded question types. We’re expanding question types with formats and structures that are more resistant to AI solutions, such as projects and code repositories. These have the added benefit of being extremely close to the real-world environments and challenges your candidates would face in their daily work, giving you a true-to-life evaluation of their skills.

What can you do?

No matter where your company stands on AI, we believe it’s best to be transparent about its capabilities. Yes, AI can solve simpler technical assessment questions. We prefer you to know that so that you can take informed actions.

So what can you do? Every company is coming at AI in their own way, so there’s no one right answer. What works for one organization may not work for another. But broadly speaking, here are some steps you should consider to protect the integrity of your assessments.

- Stay informed. Yes, some technical questions can be solved by AI. At HackerRank, we help ensure assessment integrity through our market-leading plagiarism detection and through solvability indicators that give you the transparency you need to deliver fair assessments.

- Replace solvable questions. When a question in one of your assessments is flagged as AI solvable, a simple course of action is to replace it with a question from our library that is not flagged. We also recommend looking at the type of question you’re asking, and what you’re hoping to learn from it. It may make sense to replace a solvable question with an entirely different question type.

- Embrace new question types. Newer question formats like projects and code repos are more resistant to AI, and their close resemblance to real-world scenarios gives you a truer-to-life evaluation of how a candidate would perform in their daily work.

- Take advantage of certified assessments. Don’t want to deal with maintaining and updating assessments? Let us do it for you. With certified assessments, HackerRank experts handle all of the content curation and monitoring, including replacing any leaked or AI-solvable questions.

- Leverage HackerRank professional services. Have special needs for your assessments? Engage our experts for monitoring and content creation customized to your specific business objectives.

Ensure assessment fairness and your own peace of mind

Ensuring assessment integrity in a time of rapidly advancing AI can seem difficult. You can only dial up question complexity so far before it starts to degrade the assessment experience and even compromise the value of assessments in finding qualified talent. That’s why we’re focused on reinforcing key pillars of assessment integrity, including our industry-leading AI-powered plagiarism detection, certified assessments, and solvability indicators that give you the transparency and signals you need to make the best decisions about your assessments.

Be sure to check out our plagiarism detection page for more detail on how HackerRank is ensuring assessment integrity.